Method

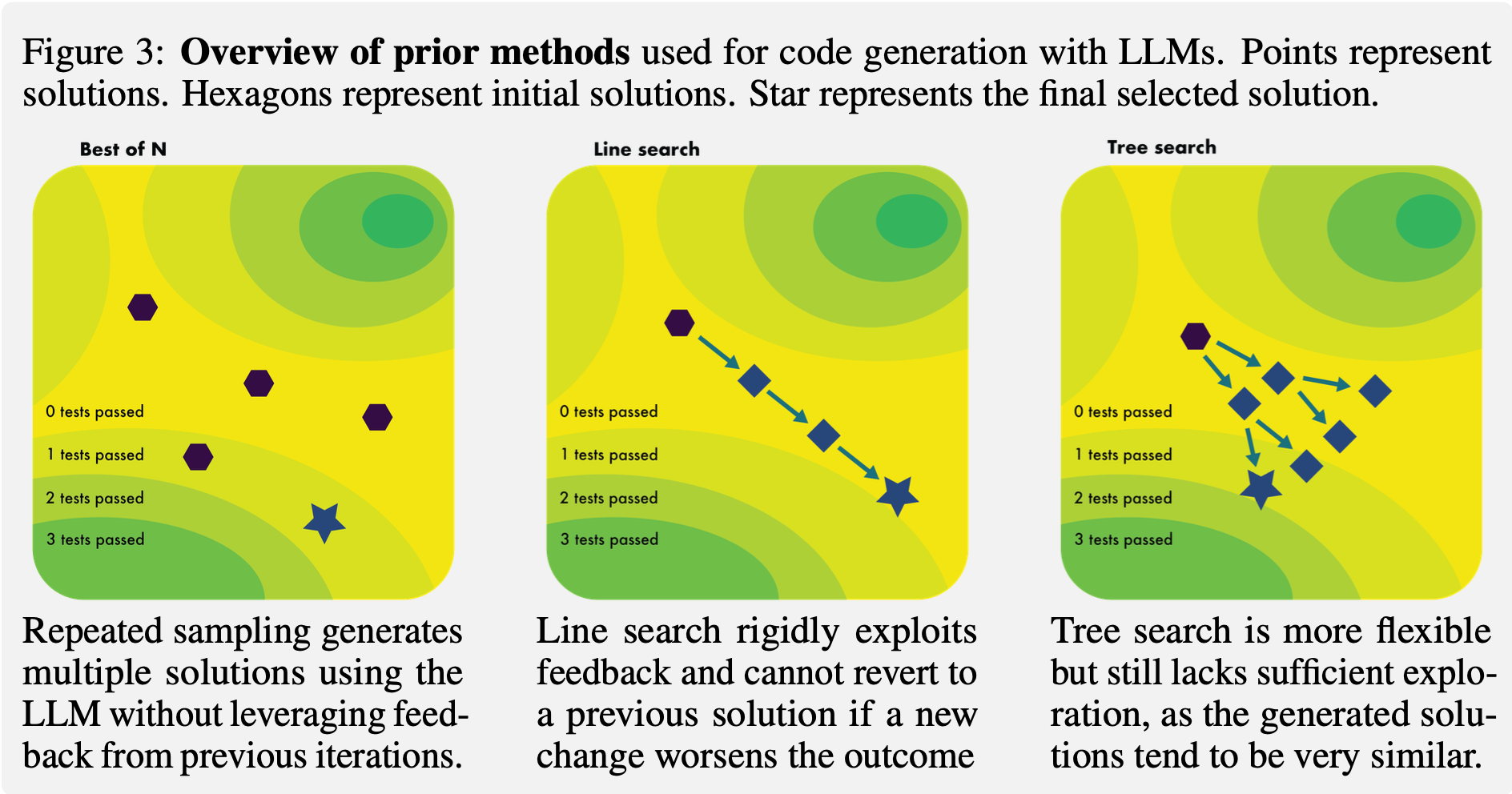

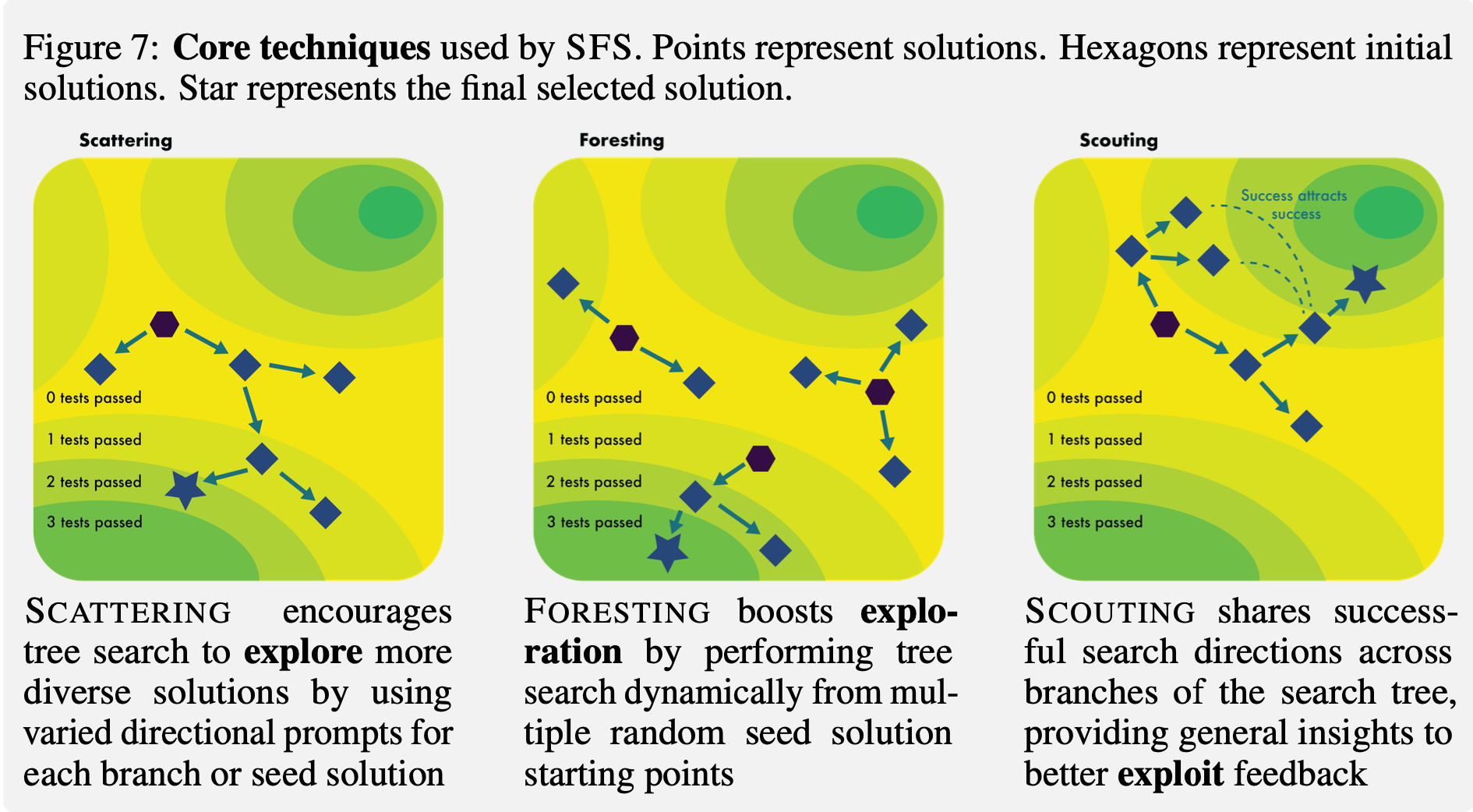

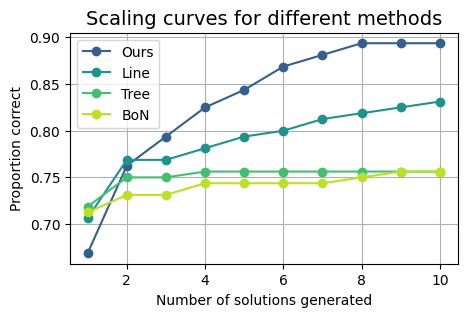

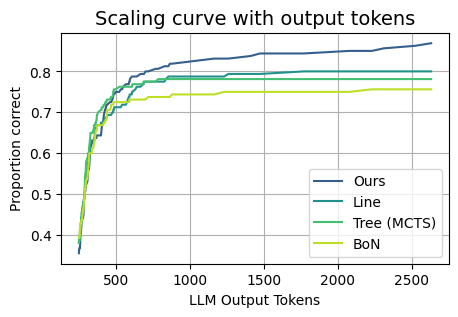

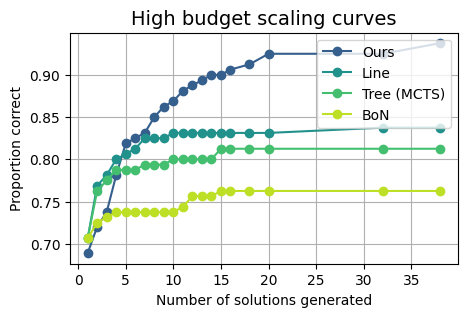

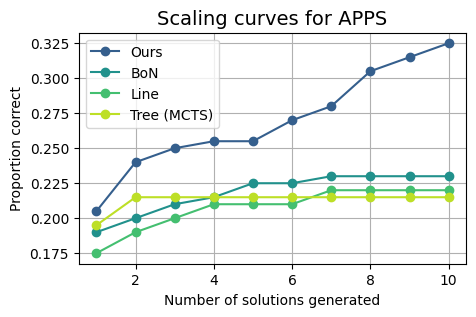

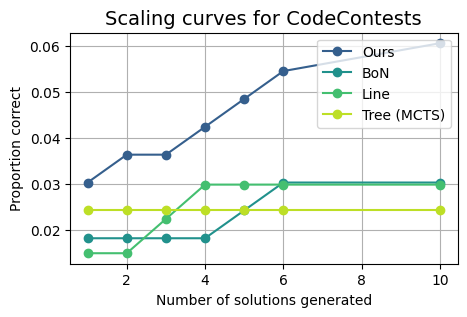

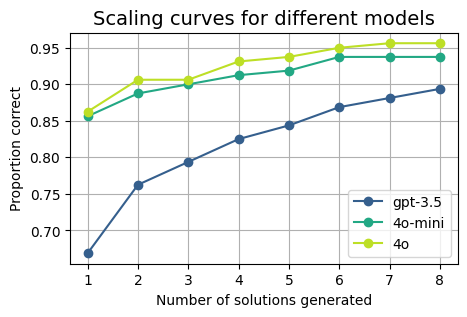

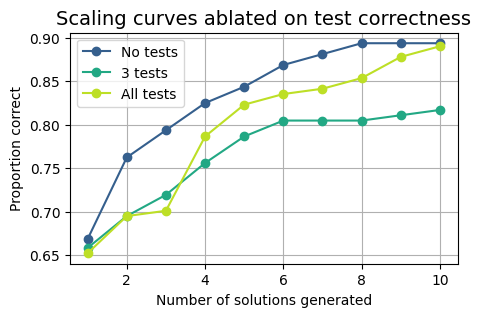

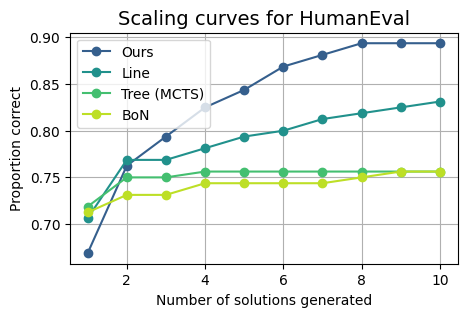

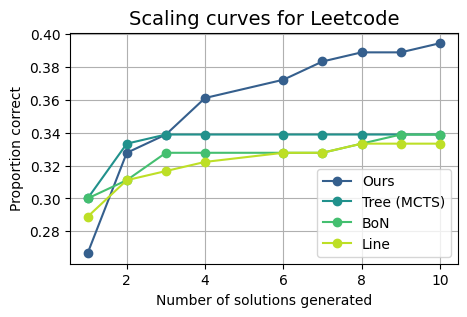

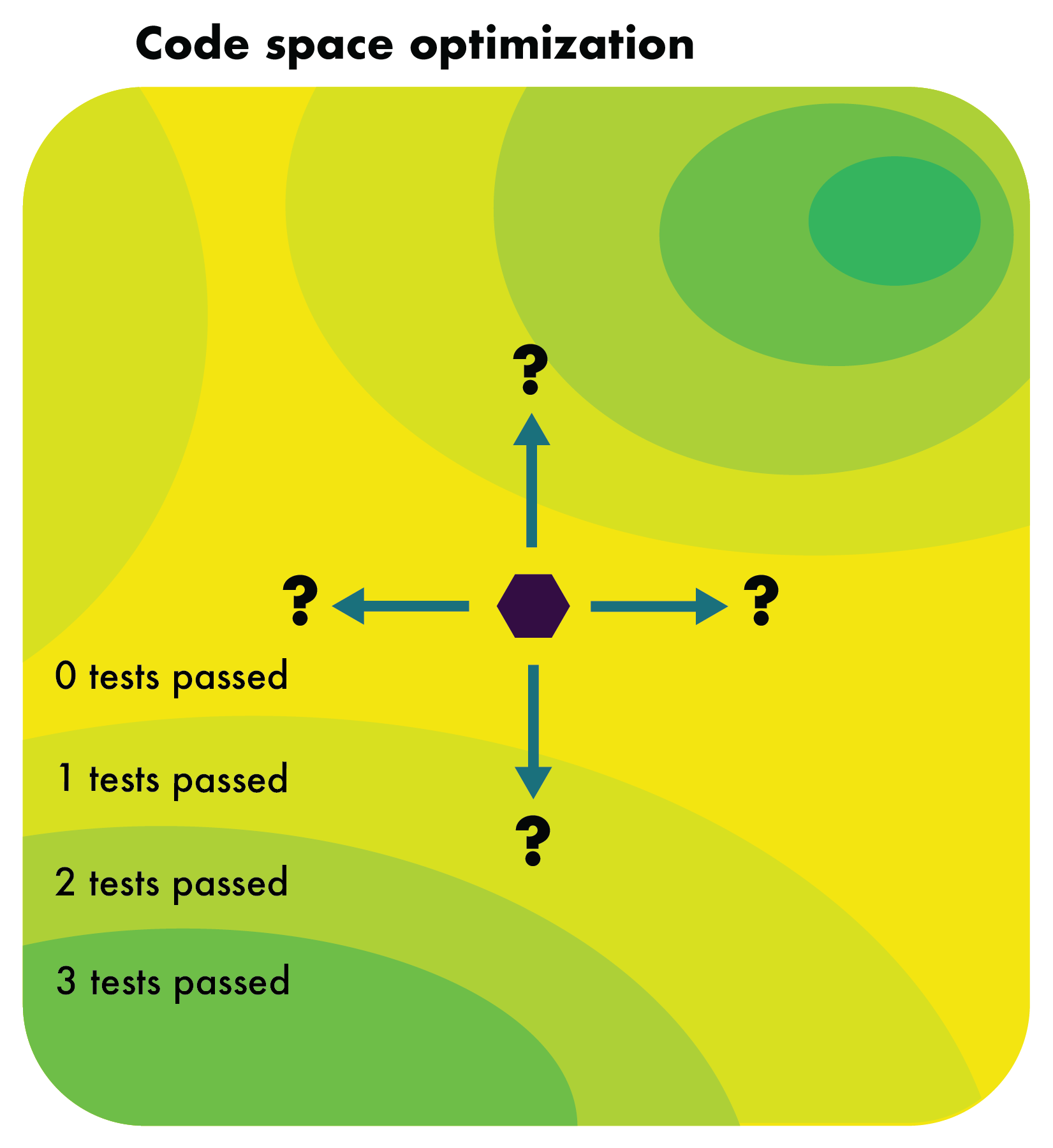

Our approach treats code generation as a black-box optimization problem in which each generated function or program is an element of the code space. The objective is to find a solution that passes all hidden tests. We enhance tree search to balance exploration (Scattering) and exploitation (leveraging partial successes). Specifically:

- Scattering: Before branching from a parent solution, the LLM proposes multiple textual “directions” for improvement, ensuring that child solutions differ significantly from one another and helping the search escape local optima.

- Foresting: We use multiple “seed” solutions and dynamically choose which seeds to expand next. Each seed has its own search tree, reducing the risk of refining a single flawed seed.

- Scouting: Global insights about promising or unpromising directions are shared across branches, so that good directions are reinforced and unproductive ones are avoided.
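How the three techniques interact can be illustrated with a minimal, self-contained sketch. It is not the authors' implementation: the LLM is replaced by a toy stand-in in which a "program" is a list of integers, each position counts as one test, and a "direction" is the hypothetical edit "set position i to value v". The names `sfs_sketch`, `revise`, and `passed`, the UCB-style rule for choosing which tree to expand, and the ranking of directions by mean gain are all assumptions made for illustration.

```python
import math
import random
from collections import defaultdict

# Toy stand-ins (assumptions, not from the source): a "program" is a list of
# ints, each position is one test, and a direction is "set position i to v".
TARGET = [3, 1, 4, 1, 5]
DIRECTIONS = [(i, v) for i in range(len(TARGET)) for v in range(6)]


def passed(solution):
    """Verification signal: how many tests the candidate passes."""
    return sum(a == b for a, b in zip(solution, TARGET))


def revise(solution, direction):
    """Stand-in for the LLM rewriting a solution along one direction."""
    i, v = direction
    return solution[:i] + [v] + solution[i + 1:]


def sfs_sketch(seeds, k=3, max_iters=100, rng_seed=0):
    rng = random.Random(rng_seed)
    # Foresting: one tree per seed; a node is (solution, tests_passed, parent_index).
    forest = [[(list(s), passed(s), None)] for s in seeds]
    visits = [0] * len(forest)
    # Scouting: per-direction feedback shared by every tree.
    gain, tries = defaultdict(int), defaultdict(int)

    def best(tree):
        return max(range(len(tree)), key=lambda n: tree[n][1])

    def mean_gain(d):
        return gain[d] / tries[d] if tries[d] else 0.0

    for step in range(max_iters):
        # Stop as soon as any tree holds a solution passing every test.
        for tree in forest:
            solution, score, _ = tree[best(tree)]
            if score == len(TARGET):
                return solution, step

        # Foresting: UCB-style choice of which tree to expand next (assumed rule).
        def priority(j):
            exploit = forest[j][best(forest[j])][1] / len(TARGET)
            explore = math.sqrt(math.log(step + 1) / (visits[j] + 1))
            return exploit + explore

        j = max(range(len(forest)), key=priority)
        visits[j] += 1
        tree = forest[j]
        parent_idx = best(tree)
        parent, parent_score, _ = tree[parent_idx]

        # Scattering: k distinct directions that actually change the parent,
        # ranked by shared feedback, then least-tried first, then randomly.
        candidates = [d for d in DIRECTIONS if parent[d[0]] != d[1]]
        candidates.sort(key=lambda d: (-mean_gain(d), tries[d], rng.random()))
        for d in candidates[:k]:
            child = revise(parent, d)
            child_score = passed(child)
            gain[d] += child_score - parent_score  # Scouting update
            tries[d] += 1
            tree.append((child, child_score, parent_idx))

    # Budget exhausted: return the best candidate found so far.
    top = max((t[best(t)] for t in forest), key=lambda node: node[1])
    return top[0], max_iters


solution, expansions = sfs_sketch([[0] * 5, [1] * 5, [5] * 5])
print(solution, expansions)
```

In this toy setting a direction that fixed a test in one tree is tried first in every other tree, which is the role Scouting plays across branches; in a real system the directions would be free-form text and the feedback would come from running the generated tests.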

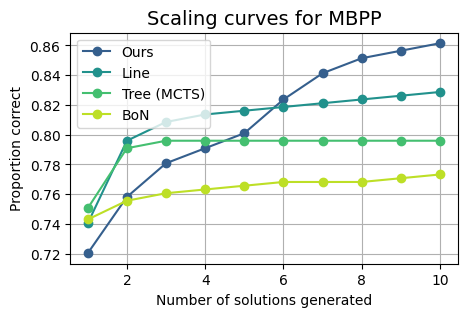

Together, these techniques let SFS find correct solutions faster and exploit verification signals (such as partial test passes) more effectively than simpler inference approaches.